Robert Collins

Penn State

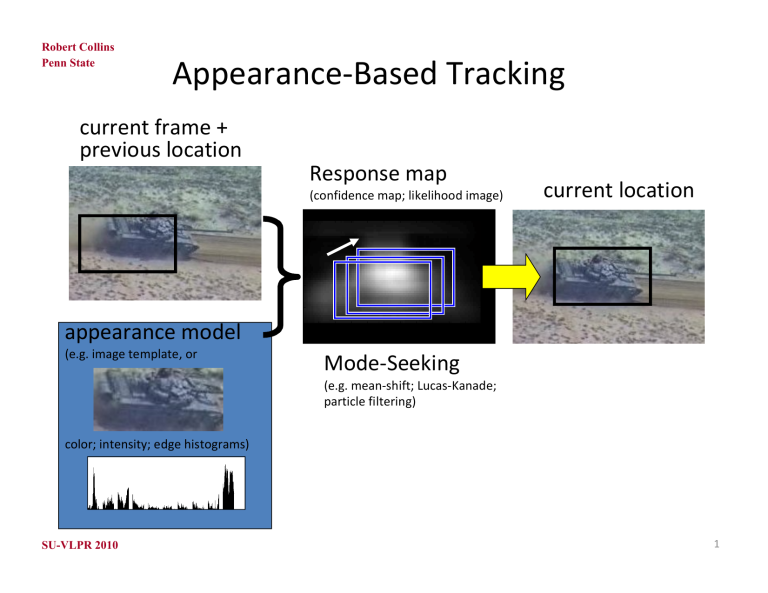

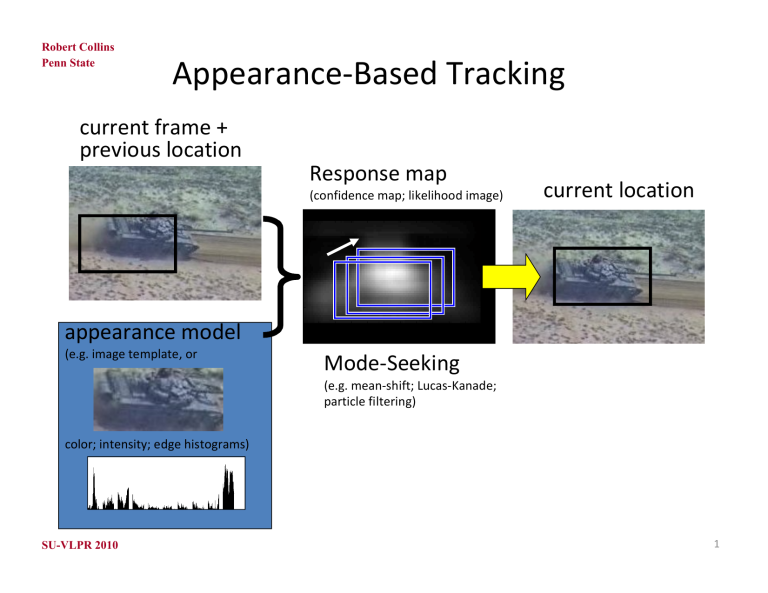

Appearance-Based Tracking

current frame + previous location
→ appearance model (e.g. image template, or color, intensity, or edge histograms)
→ response map (confidence map; likelihood image)
→ mode-seeking (e.g. mean-shift, Lucas-Kanade, particle filtering)
→ current location
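
The pipeline above can be made concrete with the simplest possible choices. The appearance model is a raw image template, and the response map is the negative sum of squared differences (SSD). An exhaustive search for the peak stands in for mode-seeking; mean-shift, Lucas-Kanade and particle filtering are smarter ways to find the same peak. This is a minimal NumPy sketch under those assumptions, and `ssd_response_map` and `track_step` are hypothetical names.

```python
import numpy as np

def ssd_response_map(frame, template, prev_loc, radius):
    """Response map: negative SSD between the template and each candidate
    window whose top-left corner lies within `radius` pixels of prev_loc.
    Higher is better; -inf marks windows that fall outside the frame."""
    th, tw = template.shape
    r0, c0 = prev_loc
    size = 2 * radius + 1
    response = np.full((size, size), -np.inf)
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            r, c = r0 + dr, c0 + dc
            if r < 0 or c < 0 or r + th > frame.shape[0] or c + tw > frame.shape[1]:
                continue  # candidate window would fall outside the frame
            patch = frame[r:r + th, c:c + tw]
            response[dr + radius, dc + radius] = -np.sum((patch - template) ** 2)
    return response

def track_step(frame, template, prev_loc, radius=5):
    """One step: build the response map, then take its peak as the new location."""
    response = ssd_response_map(frame, template, prev_loc, radius)
    i, j = np.unravel_index(np.argmax(response), response.shape)
    return int(prev_loc[0] + i - radius), int(prev_loc[1] + j - radius)
```

For example, if the template is cut from a frame at (20, 22) and the previous location was (18, 19), `track_step` recovers (20, 22), because that window matches exactly.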

SU-VLPR 2010


Motivation for Online Adaptation

First of all, we want to succeed at persistent, long-term tracking!

The more invariant your appearance model is to variations in

lighting and geometry, the less specific it is in representing a

particular object. There is then a danger of getting confused with

other objects or background clutter.

Online adaptation of the appearance model or the features used allows the representation to retain good specificity at each time frame, while evolving to achieve overall generality across large variations in object/background/lighting appearance.


Tracking as Classification

Idea first introduced by Collins and Liu, “Online Selection of

Discriminative Tracking Features”, ICCV 2003

• Target tracking can be treated as a binary classification

problem that discriminates foreground object from scene

background.

• This point of view opens up a wide range of classification and

feature selection techniques that can be adapted for use in

tracking.


Overview:

[Figure: foreground and background samples from the current frame train a classifier. Samples from the new frame are scored by the classifier to produce a response map, whose peak gives the estimated location.]
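
The overview loop can be sketched with a deliberately simple classifier. The slides do not fix one, so a nearest-mean classifier on RGB values is assumed here purely for illustration. Each pixel's response is its distance to the background mean minus its distance to the foreground mean, and the estimated location is the centroid of the positive responses. All names are hypothetical.

```python
import numpy as np

def train_nearest_mean(fg_samples, bg_samples):
    """'Classifier' = the mean RGB value of each class (inputs are N x 3 arrays)."""
    return fg_samples.mean(axis=0), bg_samples.mean(axis=0)

def response_map(frame, model):
    """Score each pixel of an (H, W, 3) frame: positive where it is closer
    to the foreground mean than to the background mean."""
    fg_mean, bg_mean = model
    d_fg = np.linalg.norm(frame - fg_mean, axis=-1)
    d_bg = np.linalg.norm(frame - bg_mean, axis=-1)
    return d_bg - d_fg

def estimate_location(resp):
    """Centroid (row, col) of the positive part of the response map."""
    w = np.clip(resp, 0, None)
    rows, cols = np.indices(resp.shape)
    total = w.sum()
    return (rows * w).sum() / total, (cols * w).sum() / total
```

For a red 5 x 5 square centered at (12, 22) on a blue background, `estimate_location` returns (12, 22).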


Observation

Tracking success/failure is highly correlated with our

ability to distinguish object appearance from background.

Suggestion:

Explicitly seek features that best discriminate between object

and background samples.

Continuously adapt the features used, to deal with changing background, changes in object appearance, and changes in lighting conditions.

Collins and Liu, “Online Selection of

Discriminative Tracking Features”, ICCV 2003


Feature Selection Prior Work

Feature Selection: choose M features from N candidates (M << N)

Traditional Feature Selection Strategies

• Forward Selection

• Backward Selection

• Branch and Bound

Viola and Jones, Cascaded Feature Selection for Classification

Bottom Line: slow, off-line process
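
Greedy forward selection shows why these strategies are an off-line process. The sketch below assumes a caller-supplied `score(subset)` function, which is hypothetical; in practice it might be cross-validated accuracy. Choosing M of N features costs N + (N−1) + … + (N−M+1) subset evaluations, and each one may mean retraining a classifier.

```python
def forward_selection(n_candidates, m, score):
    """Greedily grow a subset of size m from features 0..n_candidates-1.
    `score(subset)` is any user-supplied evaluation; higher is better.
    Returns the selected features and the number of score() calls made."""
    selected, evaluations = [], 0
    remaining = list(range(n_candidates))
    for _ in range(m):
        best_feat, best_score = None, float("-inf")
        for f in remaining:
            s = score(selected + [f])  # one (possibly expensive) evaluation
            evaluations += 1
            if s > best_score:
                best_feat, best_score = f, s
        selected.append(best_feat)
        remaining.remove(best_feat)
    return selected, evaluations
```

With N = 5 candidates and M = 2, this makes 5 + 4 = 9 calls to `score`.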


Evaluation of Feature Discriminability

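[Figure: object and background feature histograms; the log likelihood ratio of the two (positive for object, negative for background); and the resulting object and background likelihood histograms.]

The log likelihood ratio can be thought of as a nonlinear, “tuned” feature, generated from a linear seed feature.

Feature score (variance ratio):

$$\mathrm{VR} = \frac{\text{variance between classes}}{\text{variance within classes}}$$

Note: this example also explains why we don’t just use LDA.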
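
The feature score can be sketched as follows, based on our reading of Collins and Liu (ICCV 2003). The log likelihood ratio of the normalized object (p) and background (q) histograms is computed per bin. A small floor `delta` avoids log(0); its value here is an assumption. The variance ratio then compares the variance of that ratio over both classes together against the sum of its variances within each class. Function names are hypothetical.

```python
import numpy as np

def log_likelihood_ratio(obj_hist, bg_hist, delta=1e-3):
    """Per-bin L(i) = log(max(p(i), delta) / max(q(i), delta)), where p and q
    are the normalized object and background histograms."""
    p = obj_hist / obj_hist.sum()
    q = bg_hist / bg_hist.sum()
    return np.log(np.maximum(p, delta) / np.maximum(q, delta))

def weighted_var(values, dist):
    """Variance of `values` under the discrete distribution `dist`."""
    mean = np.sum(dist * values)
    return np.sum(dist * values ** 2) - mean ** 2

def variance_ratio(obj_hist, bg_hist, delta=1e-3, eps=1e-12):
    """Feature score: variance of L over both classes combined, divided by the
    sum of its variances within each class. eps guards a zero denominator."""
    p = obj_hist / obj_hist.sum()
    q = bg_hist / bg_hist.sum()
    L = log_likelihood_ratio(obj_hist, bg_hist, delta)
    between = weighted_var(L, (p + q) / 2)
    within = weighted_var(L, p) + weighted_var(L, q)
    return between / (within + eps)
```

A feature whose object and background histograms barely overlap gets a much higher score than one whose histograms largely overlap. That is the ranking used to pick features.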


Example: 1D Color Feature Spaces

Color features: integer linear combinations of R, G, B:

$$F = \frac{aR + bG + cB}{|a| + |b| + |c|} + \text{offset}, \qquad a, b, c \in \{-2, -1, 0, 1, 2\},$$

where the offset is chosen to bring the result back to 0,…,255.

The 49 color feature candidates roughly uniformly

sample the space of 1D marginal distributions of RGB.
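
Here is a sketch of the candidate set and the feature mapping. Enumerating a, b, c ∈ {−2, …, 2} gives 124 nonzero triples. Dropping scalar multiples leaves fewer: (2, 2, 2) gives the same normalized feature as (1, 1, 1). Identifying each triple with its negation, which only mirrors the feature about the middle of the range, leaves exactly 49 distinct candidates. Treating negations as the same feature is our assumption about how the count of 49 arises. The offset shifts the most negative possible value to 0. Function names are hypothetical.

```python
import itertools
from math import gcd
import numpy as np

def color_feature_candidates():
    """One representative (a, b, c) per distinct projection direction:
    no zero vector, no scalar multiples, (a, b, c) ~ (-a, -b, -c)."""
    seen, candidates = set(), []
    for a, b, c in itertools.product(range(-2, 3), repeat=3):
        if (a, b, c) == (0, 0, 0):
            continue
        g = gcd(gcd(abs(a), abs(b)), abs(c))
        key = (a // g, b // g, c // g)           # reduce scalar multiples
        first = next(v for v in key if v != 0)
        if first < 0:                            # canonical sign
            key = tuple(-v for v in key)
        if key not in seen:
            seen.add(key)
            candidates.append(key)
    return candidates

def color_feature(rgb, coeffs):
    """Map an (..., 3) uint8 RGB array to a 1D feature in [0, 255]."""
    a, b, c = coeffs
    norm = abs(a) + abs(b) + abs(c)
    neg = sum(-v for v in coeffs if v < 0)       # total weight of negative terms
    raw = rgb[..., 0] * float(a) + rgb[..., 1] * float(b) + rgb[..., 2] * float(c)
    return raw / norm + 255.0 * neg / norm       # offset maps the minimum to 0
```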


Example

[Figure: a training frame and a test frame, with foreground and background regions marked; candidate features sorted by variance ratio.]


Example: Feature Ranking

[Figure: candidate features ranked from best to worst.]


Overview of Tracking Algorithm

[Figure: log likelihood images.]

Note: since log likelihood images contain negative values, a modified mean-shift algorithm must be used, as described in Collins, CVPR’03.
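
The sketch below shows how a log likelihood image is formed: each pixel looks up the log likelihood ratio of its feature bin. It also shows why negative values matter for mean-shift, whose weights are meant to be nonnegative. To keep the sketch short, it simply clips negative weights to zero. That clipping is a plain simplification swapped in here; it is not the modified algorithm of Collins, CVPR’03. The bin count, the square window and all names are assumptions.

```python
import numpy as np

def llr_image(feature_img, llr_table, n_bins=32):
    """Back-project a per-bin log likelihood ratio onto every pixel.
    feature_img holds 1D feature values in [0, 255]."""
    bins = np.clip((feature_img.astype(float) * n_bins / 256).astype(int), 0, n_bins - 1)
    return llr_table[bins]

def mean_shift(weights, start, half_size, n_iter=20):
    """Plain mean-shift over a square window. Negative weights are clipped
    to zero (a simplification, not Collins's CVPR'03 variant)."""
    w = np.clip(weights, 0, None)
    r, c = start
    for _ in range(n_iter):
        rc, cc = int(round(r)), int(round(c))
        r0, r1 = max(rc - half_size, 0), min(rc + half_size + 1, w.shape[0])
        c0, c1 = max(cc - half_size, 0), min(cc + half_size + 1, w.shape[1])
        win = w[r0:r1, c0:c1]
        if win.sum() == 0:
            break  # no positive evidence inside the window
        rows, cols = np.indices(win.shape)
        new_r = r0 + (rows * win).sum() / win.sum()
        new_c = c0 + (cols * win).sum() / win.sum()
        if abs(new_r - r) < 1e-3 and abs(new_c - c) < 1e-3:
            break  # converged
        r, c = new_r, new_c
    return r, c
```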


Naive Approach to Handle Change

• One approach to handle changing appearance over

time is adaptive template update

• Once you find the location of the object in a new frame, just extract a new template, centered at that location

• What is the potential problem?


Drift is a Universal Problem!

[Figure: long-term tracking example, 1 hour.]

Example courtesy of Horst Bischof. Green: online boosting tracker; yellow: drift-avoiding “semi-supervised boosting” tracker (we will discuss it later today).


Template Drift

• If your estimate of template location is slightly off, you

are now looking for a matching position that is similarly

off center.

• Over time, this offset error builds up until the template

starts to “slide” off the object.

• The problem of drift is a major issue with methods that

adapt to changing object appearance.
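
A toy 1D model makes the build-up concrete. Assume, for illustration only, that each match is off by the template's current offset from the true object center plus a fresh, zero-mean localization error. If the template is re-extracted at every estimate, the offset becomes the running sum of all past errors. That is a random walk whose spread grows roughly like the square root of the number of frames. Matching always against the first template keeps the errors from accumulating. `simulate_offsets` is a hypothetical name.

```python
import numpy as np

def simulate_offsets(n_frames, noise_std, anchored, seed=0):
    """Per-frame localization error in the toy model described above.
    anchored=False: naive template update; anchored=True: keep the first template."""
    rng = np.random.default_rng(seed)
    offset = 0.0  # template's offset from the true object center
    history = []
    for _ in range(n_frames):
        error = rng.normal(0.0, noise_std)  # fresh localization error this frame
        estimate = offset + error           # the match inherits the template's offset
        history.append(estimate)
        if not anchored:
            offset = estimate               # naive update: re-center template at estimate
    return np.array(history)
```

With the same random errors, the naive tracker's error sequence is exactly the cumulative sum of the anchored tracker's errors, which is the drift described above.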


Anchoring Avoids Drift

This is an example of a general

strategy for drift avoidance

that we’ll call “anchoring”.

The key idea is to make sure

you don’t stray too far from

your initial appearance model.

Potential drawbacks?

[answer: You cannot accommodate

very LARGE changes in appearance.]


Avoiding Model Drift

Drift: background pixels mistakenly incorporated into the object model

pull the model off the correct location, leading to more misclassified

background pixels, and so on.

Our solution: force foreground object distribution to be a combination

of current appearance and original appearance (anchor distribution)

anchor distribution = object appearance histogram from first frame

model distribution = (current distribution + anchor distribution) / 2

Note: this solves the drift problem, but limits the ability of the

appearance model to adapt to large color changes
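
The anchored update is a one-liner on normalized histograms. In this sketch the 32-bin histogram over a 1D feature in [0, 255] is an assumption, and the names are hypothetical.

```python
import numpy as np

def normalized_hist(values, n_bins=32):
    """Normalized histogram of 1D feature values in [0, 255]."""
    h, _ = np.histogram(values, bins=n_bins, range=(0, 256))
    return h / max(h.sum(), 1)

def anchored_model(anchor_hist, current_hist):
    """model distribution = (current distribution + anchor distribution) / 2"""
    return 0.5 * (anchor_hist + current_hist)
```

However far the current distribution moves, at least half of the model's mass stays on the first-frame appearance. That bounds drift, and it is also why large color changes cannot be fully absorbed.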


Examples: Tracking Hard-to-See Objects

Trace of selected features


Examples: Changing Illumination / Background

Trace of selected features


Examples: Minimizing Distractions

[Figure: current location, feature scores, and the top 3 weight (log likelihood) images.]


More Detail

top 3 weight (log likelihood) images


On-line Boosting for Tracking

Grabner, Grabner, and Bischof, “Real-time tracking via on-line

boosting.” BMVC 2006.

Use boosting to select and maintain the best discriminative

features from a pool of feature candidates.

• Haar Wavelets

• Integral Orientation Histograms

• Simplified Version of Local Binary Patterns
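
Haar wavelet features like those listed above are commonly evaluated with an integral image. With it, any rectangle sum costs four lookups regardless of the rectangle's size. The slide does not say how the features are computed, so this is a minimal sketch of that standard technique, with hypothetical names.

```python
import numpy as np

def integral_image(img):
    """ii[r, c] = sum of img[:r, :c], with a zero first row and column."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def box_sum(ii, r, c, h, w):
    """Sum of the h x w box with top-left corner (r, c), in O(1)."""
    return ii[r + h, c + w] - ii[r, c + w] - ii[r + h, c] + ii[r, c]

def haar_two_rect_horizontal(ii, r, c, h, w):
    """One Haar-like feature: left h x w box minus the adjacent right h x w box."""
    return box_sum(ii, r, c, h, w) - box_sum(ii, r, c + w, h, w)
```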


From Daniel Vaquero, UCSB

AdaBoost learning

• AdaBoost creates a single strong classifier from many weak classifiers

• Initialize sample weights

• For each cycle:

– Find a classifier that performs well on the

weighted sample

– Increase weights of misclassified examples

• Return a weighted combination of

classifiers
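
The slide states AdaBoost in words. For reference, the standard discrete AdaBoost updates are given below, assuming labels $y_i \in \{-1,+1\}$ and weak classifiers $h_t(x) \in \{-1,+1\}$. The symbols are ours, not the slide's.

$$
\begin{aligned}
w_i^{(1)} &= \frac{1}{N}, \\
\varepsilon_t &= \sum_i w_i^{(t)} \, [\, h_t(x_i) \neq y_i \,], \qquad
\alpha_t = \frac{1}{2} \ln \frac{1 - \varepsilon_t}{\varepsilon_t}, \\
w_i^{(t+1)} &= \frac{w_i^{(t)} \exp\!\big(-\alpha_t \, y_i \, h_t(x_i)\big)}{Z_t}, \\
H(x) &= \operatorname{sign}\Big( \sum_t \alpha_t \, h_t(x) \Big),
\end{aligned}
$$

where $Z_t$ normalizes the weights to sum to one. A misclassified example has $y_i h_t(x_i) = -1$, so its weight is multiplied by $e^{\alpha_t} > 1$ whenever $\varepsilon_t < 1/2$.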


OFF-line Boosting for Feature Selection

– Each weak classifier corresponds to a feature

– train all weak classifiers - choose best at each boosting iteration

– add one feature in each iteration

[Figure: starting from labeled training samples and a weight distribution over all training samples, each iteration trains each feature in the feature pool, chooses the best one (lowest error), calculates its voting weight, and updates the weight distribution. The features chosen over all iterations form the strong classifier.]

Horst Bischof
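
Here is a sketch of off-line boosting used as feature selection. It assumes each weak classifier is a threshold (decision stump) on a single feature; the stump form and all names are our assumptions. Each round trains every candidate and keeps the one with the lowest weighted error. It then computes that candidate's voting weight and re-weights the samples.

```python
import numpy as np

def best_stump(X, y, w):
    """Train one threshold stump per feature; return the lowest-error one as
    (error, feature, threshold, polarity). X: (N, F), y in {-1, +1}, w sums to 1."""
    best = None
    for f in range(X.shape[1]):
        for thr in np.unique(X[:, f]):
            for polarity in (1, -1):
                pred = np.where(polarity * (X[:, f] - thr) >= 0, 1, -1)
                err = w[pred != y].sum()
                if best is None or err < best[0]:
                    best = (err, f, thr, polarity)
    return best

def adaboost_select(X, y, n_rounds):
    """Off-line boosting as feature selection: one feature per round."""
    w = np.full(len(y), 1.0 / len(y))
    model = []
    for _ in range(n_rounds):
        err, f, thr, pol = best_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)     # avoid log(0) and division by zero
        alpha = 0.5 * np.log((1 - err) / err)    # voting weight
        pred = np.where(pol * (X[:, f] - thr) >= 0, 1, -1)
        w = w * np.exp(-alpha * y * pred)        # up-weight mistakes
        w /= w.sum()
        model.append((alpha, f, thr, pol))
    return model

def predict(model, X):
    """Strong classifier: sign of the weighted stump votes."""
    score = sum(a * np.where(p * (X[:, f] - t) >= 0, 1, -1) for a, f, t, p in model)
    return np.where(score >= 0, 1, -1)
```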


On-line Version…

Samples are patches (+ / −).

[Figure: one training sample, with an initial importance, is passed through selectors hSelector1, hSelector2, …, hSelectorN. Selector n holds weak classifiers hn,1, …, hn,M, which are updated with the sample, and each selector picks one of them. The sample's importance is re-estimated after each selector and passed on to the next. Together the selectors form the current strong classifier hStrong. Repeat for each training sample.]

Horst Bischof
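
This is a compact sketch of the on-line version. It follows the selector scheme in the figure and uses an Oza-Russell style importance update. Several parts are assumptions. Each weak learner is a hypothetical one-feature nearest-mean classifier, updated without weighting. Each selector keeps its own copy of the pool. The step in which Grabner et al. replace the worst weak classifier is omitted.

```python
import numpy as np

class OnlineStump:
    """Hypothetical weak learner on one feature: running means of the positive
    and negative values; predicts the class of the nearer mean."""
    def __init__(self, feature):
        self.feature = feature
        self.mean = {1: 0.0, -1: 0.0}
        self.count = {1: 0, -1: 0}

    def update(self, x, y):
        self.count[y] += 1
        self.mean[y] += (x[self.feature] - self.mean[y]) / self.count[y]

    def predict(self, x):
        v = x[self.feature]
        return 1 if abs(v - self.mean[1]) <= abs(v - self.mean[-1]) else -1


class Selector:
    """Holds M weak learners and their importance-weighted error estimates."""
    def __init__(self, features):
        self.learners = [OnlineStump(f) for f in features]
        self.lam_correct = np.full(len(features), 1e-3)
        self.lam_wrong = np.full(len(features), 1e-3)
        self.best, self.alpha = 0, 0.0

    def update(self, x, y, lam):
        """Update all learners with (x, y), select the best one, and return
        the sample's importance for the next selector."""
        correct = []
        for m, h in enumerate(self.learners):
            h.update(x, y)
            ok = h.predict(x) == y
            correct.append(ok)
            if ok:
                self.lam_correct[m] += lam
            else:
                self.lam_wrong[m] += lam
        errors = self.lam_wrong / (self.lam_correct + self.lam_wrong)
        self.best = int(np.argmin(errors))
        err = float(np.clip(errors[self.best], 1e-6, 1 - 1e-6))
        self.alpha = 0.5 * np.log((1 - err) / err)
        # raise the importance of samples the selected learner got wrong
        return lam / (2 * (1 - err)) if correct[self.best] else lam / (2 * err)

    def predict(self, x):
        return self.learners[self.best].predict(x)


class OnlineBoost:
    """N selectors in sequence; their weighted votes form hStrong."""
    def __init__(self, n_selectors, features):
        self.selectors = [Selector(features) for _ in range(n_selectors)]

    def update(self, x, y):
        lam = 1.0  # initial importance of the new training sample
        for s in self.selectors:
            lam = s.update(x, y, lam)

    def predict(self, x):
        score = sum(s.alpha * s.predict(x) for s in self.selectors)
        return 1 if score >= 0 else -1
```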


Tracking Examples

Horst Bischof


Ensemble Tracking

Avidan, “Ensemble Tracking,” PAMI 2007

Use online boosting to select and maintain a set of weak

classifiers (rather than single features), weighted to form a

strong classifier. Samples are pixels.

Each weak classifier is a linear

hyperplane in an 11D feature space

composed of R,G,B color and a

histogram of gradient orientations.

Classification is performed at each pixel, resulting in a dense

confidence map for mean-shift tracking.
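
The per-pixel classification can be sketched with array operations. In this sketch each weak classifier is a hyperplane [w, b] that votes sign(w · x + b), and the votes are combined with boosting weights. The sign-vote form and the names are our assumptions; the slide only says the weak classifiers are linear hyperplanes.

```python
import numpy as np

def confidence_map(features, hyperplanes, alphas):
    """Dense confidence map from an ensemble of linear weak classifiers.
    features:    (H, W, D) per-pixel feature vectors (D = 11 on the slide)
    hyperplanes: (K, D + 1) rows [w, b], one per weak classifier
    alphas:      (K,) voting weights
    Returns the (H, W) weighted vote; positive values favour the object."""
    ones = np.ones(features.shape[:2] + (1,))
    X = np.concatenate([features, ones], axis=-1)   # append a bias input
    votes = np.sign(X @ hyperplanes.T)              # (H, W, K) votes in {-1, 0, +1}
    return votes @ alphas
```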


Ensemble Tracking

During online updating:

• Perform mean-shift, and extract new pos/neg samples

• Remove worst performing classifier (highest error rate)

• Re-weight remaining classifiers and samples using AdaBoost

• Train a new classifier via AdaBoost and add it to the ensemble

Drift avoidance: the paper suggests keeping some “prior” classifiers that can never be removed (anchor strategy).
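
The update steps can be sketched as follows. Our choice, consistent with the slide's "linear hyperplane" description, is to fit each weak classifier by weighted least squares. The `n_prior` parameter implements the "never removed" anchor. Names are hypothetical, and this is not Avidan's code.

```python
import numpy as np

def train_hyperplane(X, y, w):
    """Weak classifier: weighted least-squares fit of [w, b] with X @ w + b ~ y."""
    Xa = np.hstack([X, np.ones((len(X), 1))])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(Xa * sw[:, None], y * sw, rcond=None)
    return coef

def weak_predict(coef, X):
    """Sign of the hyperplane response, as labels in {-1, +1}."""
    return np.where(X @ coef[:-1] + coef[-1] >= 0, 1, -1)

def boost_weight(w, pred, y):
    """AdaBoost voting weight from the weighted error (clipped away from 0 and 1)."""
    err = np.clip(w[pred != y].sum(), 1e-10, 1 - 1e-10)
    return 0.5 * np.log((1 - err) / err)

def update_ensemble(ensemble, X, y, n_prior=0):
    """One online update on new pixel samples X (N, D), labels y in {-1, +1}.
    The first n_prior classifiers are never removed (the anchor strategy).
    Returns the new ensemble and its voting weights."""
    # 1. remove the worst removable classifier (highest error on the new samples)
    errors = [np.mean(weak_predict(c, X) != y) for c in ensemble]
    removable = range(n_prior, len(ensemble))
    worst = max(removable, key=lambda i: errors[i]) if len(removable) else None
    kept = [c for i, c in enumerate(ensemble) if i != worst]
    # 2. re-weight the remaining classifiers and the samples, AdaBoost style
    w = np.full(len(y), 1.0 / len(y))
    alphas = []
    for c in kept:
        pred = weak_predict(c, X)
        alpha = boost_weight(w, pred, y)
        w = w * np.exp(-alpha * y * pred)
        w /= w.sum()
        alphas.append(alpha)
    # 3. train a new weak classifier on the re-weighted samples and add it
    new = train_hyperplane(X, y, w)
    alphas.append(boost_weight(w, weak_predict(new, X), y))
    return kept + [new], np.array(alphas)
```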


Semi-supervised Boosting

Grabner, Leistner and Bischof, “Semi-Supervised On-line

Boosting for Robust Tracking,” ECCV 2008.

Designed specifically to address the drift problem. It is

another example of the Anchor Strategy.

Basic ideas:

• Combine 2 classifiers

– Prior (offline trained) Hoff and online trained Hon

– Classifier Hoff + Hon cannot deviate too much from Hoff

• Semi-supervised learning framework


Supervised learning

[Figure: labeled samples (+, −) separated by a maximum-margin decision boundary.]

Horst Bischof


Can Unlabeled Data Help?

[Figure: a few labeled samples (+) among many unlabeled samples (?); the decision boundary should lie in a region of low density.]

Horst Bischof
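
Here is the low-density idea in one dimension. With only one labeled example per class, the supervised choice is the midpoint between them, but unlabeled points show where the data are sparse. This toy sketch places the threshold in the widest gap between unlabeled points that lies between the labeled examples. The widest-gap rule and the names are our assumptions.

```python
import numpy as np

def low_density_threshold(pos, neg, unlabeled):
    """1D toy: threshold at the middle of the widest gap between consecutive
    points (labeled bounds plus unlabeled points between them).
    Assumes all positive examples lie below all negative examples."""
    lo, hi = max(pos), min(neg)
    inside = [u for u in unlabeled if lo < u < hi]
    pts = np.sort(np.concatenate([[lo, hi], inside]))
    gaps = np.diff(pts)
    i = int(np.argmax(gaps))
    return (pts[i] + pts[i + 1]) / 2
```

In the test data, the labeled midpoint 0.5 would cut through a cluster of unlabeled points, while the widest-gap rule picks 0.75.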



Drift Avoidance

Key idea: samples from new frame

are only used as unlabeled data!!!

[Figure: labeled data (+, −) comes from the first frame; samples (?) from the new frame are unlabeled; both feed a combined classifier (figure labels: FIXED, DYNAMIC, STABLE).]

Horst Bischof


Examples

Green: online boosting

Yellow: semi-supervised

Horst Bischof


Bag of Patches Model

Lu and Hager, “A Nonparametric Treatment for Location

Segmentation based Visual Tracking,” CVPR 2007.

Key Idea: rather than try to maintain a set of features or set of

classifiers, appearance of foreground and background is

modeled directly by maintaining a set of sample patches.

KNN then determines the classification of new patches.
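
The KNN step can be sketched as a majority vote over both bags, with patches flattened to vectors. The value of k, the Euclidean distance and the names are assumptions; an odd k avoids ties.

```python
import numpy as np

def knn_classify(patches, fg_bag, bg_bag, k=3):
    """Label each query patch ((N, D) array) by majority vote of its k nearest
    neighbours among the foreground (+1) and background (-1) bags."""
    bag = np.vstack([fg_bag, bg_bag])
    labels = np.concatenate([np.ones(len(fg_bag)), -np.ones(len(bg_bag))])
    d = np.linalg.norm(patches[:, None, :] - bag[None, :, :], axis=-1)
    nearest = np.argsort(d, axis=1)[:, :k]
    return np.where(labels[nearest].sum(axis=1) > 0, 1, -1)
```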


Drift Avoidance (keep patch model clean)

Given new patch samples to add to foreground and background:

• Remove ambiguous patches (that match both fg and bg)

• Trim fg and bg patches based on sorted knn distances.

Remove those with small distances (redundant) as well as large

distances (outliers).

• Add clean patches to existing bag of patches.

• Resample patches, with probability of survival proportional to

distance of a patch from any patch in current image (tends to

keep patches that are currently relevant).
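
The first three cleaning steps can be sketched as below, for patches flattened to vectors. The ambiguity ratio and the trimming quantiles are hypothetical parameters. Trimming uses the new patches' nearest-neighbour distances to their own bag, which is only one possible reading of the slide. The final resampling step is omitted.

```python
import numpy as np

def nn_dist(queries, bag):
    """Distance from each query patch (N, D) to its nearest patch in bag (M, D)."""
    return np.linalg.norm(queries[:, None, :] - bag[None, :, :], axis=-1).min(axis=1)

def clean_and_add(new, bag, other_bag, ambiguity=0.8, lo_q=0.1, hi_q=0.9):
    """Add new patches to `bag` after removing ambiguous, redundant and
    outlying ones. All thresholds are hypothetical tuning parameters."""
    d_own, d_other = nn_dist(new, bag), nn_dist(new, other_bag)
    # 1. ambiguous: nearly as close to the other class as to its own class
    keep = d_own < ambiguity * d_other
    # 2. trim by sorted NN distance: small = redundant, large = outlier
    lo, hi = np.quantile(d_own, [lo_q, hi_q])
    keep &= (d_own > lo) & (d_own < hi)
    # 3. add the clean patches to the existing bag
    return np.vstack([bag, new[keep]])
```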


Sample Results

Extension to video segmentation.

See paper for the details.


Segmentation-based Tracking

This brings up a second general scheme for drift avoidance

besides anchoring, which is to perform fg/bg segmentation.

In principle, it could be a better solution, because your model is not constrained to stay near one spot, and can therefore handle arbitrarily large appearance change.

Simple examples of this strategy use motion segmentation

(change detection) and data association.
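
The simplest form of motion segmentation is change detection against a background image. In this sketch the threshold value, the static grayscale background and the names are assumptions, and data association is left out.

```python
import numpy as np

def change_mask(frame, background, thresh=25):
    """Pixels that differ from the background image by more than `thresh`."""
    diff = np.abs(frame.astype(int) - background.astype(int))
    return diff > thresh

def foreground_box(mask):
    """Bounding box (r0, c0, r1, c1) of the changed pixels, or None."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max())
```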


Segmentation-based Tracking

Yin and Collins. “Belief propagation in a 3d spatio-temporal MRF for

moving object detection.” CVPR 2007.

Yin and Collins. “Online figure-ground segmentation with edge pixel

classification.” BMVC 2008.


Segmentation-based Tracking

Yin and Collins. “Shape constrained figure-ground segmentation and

tracking.” CVPR 2009.


Tracking and Object Detection

Another way to avoid drift is to couple an object detector

with the tracker.

Particularly for face tracking or pedestrian tracking, a detector is sometimes included in the tracking loop, e.g. Yuan Li’s Cascade Particle Filter (CVPR 2007) or K. Okuma’s Boosted Particle Filter (ECCV 2004).

• If the detector produces binary detections (I see three faces: here, and here, and here), use these as input to a data association algorithm.

• If the detector produces a continuous response map, use that as input to a mean-shift tracker.
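
For the binary-detection case, greedy nearest-neighbour matching with a distance gate is about the simplest data association algorithm. It is shown here as a stand-in; the gate value and the names are assumptions.

```python
import numpy as np

def associate(tracks, detections, gate=20.0):
    """Greedy nearest-neighbour data association.
    tracks: (T, 2) and detections: (D, 2) arrays of (x, y) positions.
    Returns {track_index: detection_index}; unmatched tracks are omitted."""
    if len(tracks) == 0 or len(detections) == 0:
        return {}
    d = np.linalg.norm(tracks[:, None, :] - detections[None, :, :], axis=-1)
    pairs = sorted((d[t, j], t, j) for t in range(len(tracks))
                   for j in range(len(detections)))
    matches, used = {}, set()
    for dist, t, j in pairs:
        if dist > gate:
            break  # all remaining pairs are even farther apart
        if t not in matches and j not in used:
            matches[t] = j
            used.add(j)
    return matches
```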


Summary

Tracking is still an active research topic.

Topics of particular current interest include:

• Multi-object tracking (including multiple patches on one object)

• Synergies between

– Classification and Tracking

– Segmentation and Tracking

– Detection and Tracking

All are aimed at achieving long-term persistent tracking in

ever-changing environments.
